Boot this time with the virtio net drivers from the CD. I am not sure about this; I only tried with FreeBSD 10 (on FreeNAS), and bhyve did not support it.

Proxmox FreeNAS - architecture

Recently we have been working on a new Proxmox VE cluster based on Ceph to host STH. During the process we have learned quite a bit by experimenting with the system. While using either FreeNAS or OmniOS + Napp-it has been extremely popular, KVM and containers are where the heavy investment is right now.

Proxmox VE 4.0 swapped OpenVZ containers for LXC containers. While we wish Proxmox had made the jump and supported Docker natively, LXC is at least a positive step. For those wondering what an All-in-One configuration is, it is simply a hypervisor with shared storage (and inter-VM networking) built in. That makes it an excellent learning environment. Today we are going to show the FreeNAS version of the All-in-One configuration with the Proxmox VE 4.0 environment.

Getting Started: The Hardware

For this we are actually using a small Supermicro barebones that is in our Fremont colocation facility.

• Barebones: (Xeon D-1540 based system)
• Hard Drives: 2x
• SSDs: 2x Seagate 600 Pro 240GB (boot ZFS rpool), 2x Intel DC S3500 480GB
• RAM:

For those wondering why we are not using NVMe, the issue really comes down to heat. We run our hardware very hard, and in this particular chassis we have had issues with NVMe drives running very hot under heavy load. For a home environment, this is less of an issue.

For a datacenter environment where the hardware is being run hard, we would advise against using small, hot NVMe drives or hot larger drives like an Intel DC P3700 800GB or P3600 1.6TB if you are trying to run any type of sustained workload in this tower. In terms of SSDs, the Seagate 600 Pro 240GB and 480GB drives are absolute favorites. While not the fastest, they have been rock solid in two dozen or so machines. The Intel DC S3500s similarly work very well, and they will eventually be utilized as independent Ceph storage devices.

The Plan

We are going to assume you have used the super simple Proxmox VE 4.0 installer. We are using. As a result, we do have extremely easy access to adding.

Of course, there are setups like FreeNAS and OmniOS that offer a lot of functionality. Our basic plan is the following:

Proxmox FreeNAS – architecture

You can get away without using the Intel DC S3500s; however, it is nice to be able to migrate from ZFS to the Ceph storage cluster easily. The basic idea is that we are going to create a FreeNAS KVM VM on the mirrored ZFS rpool.

We are going to pass through the two Western Digital Red 4TB drives to the FreeNAS VM. We can then do fun things with the base FreeNAS VM image, like move it to Ceph cluster storage. Should the mirrored rpool in the Proxmox VE cluster fail, we should be able to attach the two WD Red drives to another Ceph node and then pass through the drives again to get our FreeNAS VM up and running. This can be useful if you need to recover quickly. It should be noted that running FreeNAS or other ZFS storage in a VM is far from a leading practice, and there are many reasons why you would not do this in a production cluster.

Still, for learning purposes, we thought this may be an interesting idea. The eventual goal is to simply use it as a backup target.

Again, it would be much better to simply create a zpool with the two WD Red drives in the base Debian OS and then create an iSCSI target to access the drives.

FreeNAS Setup in Proxmox VE with Pass-through

Key to this entire setup is passing through the WD Red 4TB drives to FreeNAS.

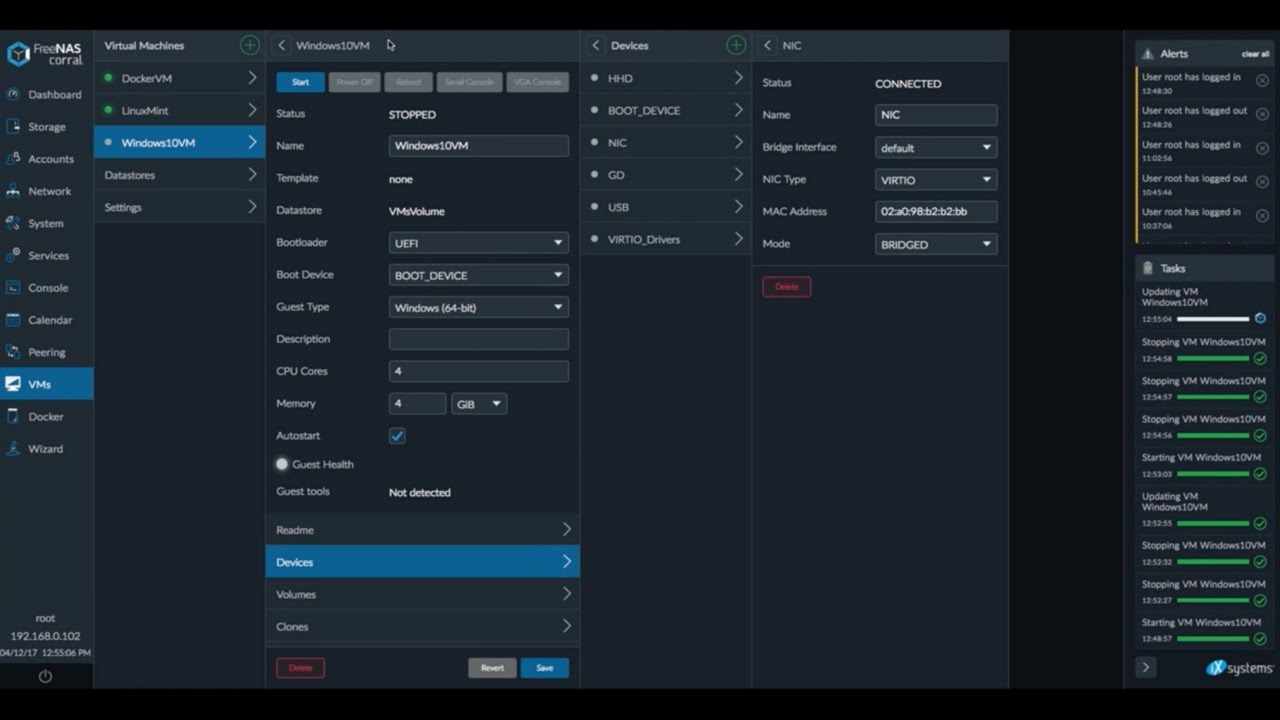

We are using FreeNAS 9.3 for this, but are eagerly awaiting FreeNAS 10. The first step is simply creating a VM for FreeNAS to work with.

Proxmox FreeNAS – make base VM

Here is a quick snapshot of the VM. In this screenshot we are actually attaching the disks as SATA, not virtio, just for testing.

You can see that the network adapter is virtio and is working well. We are using a modest 2GB data store and 8GB of RAM. We will show how to get those two disks passed by ID later in this guide.
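As a rough preview of the by-ID pass-through, here is a minimal Python sketch that only builds the `qm set` command lines rather than running them. It assumes the drives show up under `/dev/disk/by-id` with an `ata-` prefix. The VM ID `100`, the starting slot number, and the helper names `whole_disk_ids` and `passthrough_commands` are hypothetical and not taken from the setup above.

```python
import os
import re
from typing import List

BY_ID_DIR = "/dev/disk/by-id"


def whole_disk_ids(names: List[str], prefix: str = "ata-") -> List[str]:
    """Keep whole-disk entries: names with the given prefix and no -partN suffix."""
    return sorted(
        n for n in names
        if n.startswith(prefix) and not re.search(r"-part\d+$", n)
    )


def passthrough_commands(vmid: int, disk_ids: List[str],
                         bus: str = "sata", first_slot: int = 1) -> List[List[str]]:
    """Build one `qm set` argument list per disk, e.g. -sata1, -sata2, ..."""
    commands = []
    for offset, disk_id in enumerate(disk_ids):
        commands.append([
            "qm", "set", str(vmid),
            f"-{bus}{first_slot + offset}",   # bus and slot are assumptions
            f"{BY_ID_DIR}/{disk_id}",
        ])
    return commands


if __name__ == "__main__":
    # Only prints the commands; review them before running anything by hand.
    names = os.listdir(BY_ID_DIR) if os.path.isdir(BY_ID_DIR) else []
    for cmd in passthrough_commands(100, whole_disk_ids(names)):  # 100 is a placeholder VM ID
        print(" ".join(cmd))
```

Note that the filter picks up every `ata-` disk on the host, including the boot SSDs, so trim the printed list down to the two data drives before running anything. Passing `bus="virtio"` yields `-virtio1`, `-virtio2` instead of the SATA slots.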

FreeNAS Proxmox ZFS hardware

As an interesting and fun aside here, you could actually install the FreeNAS VM on just about any storage. Here we have it running on the fmt-pve-01 node’s Intel S3710 200GB mirrored zpool. As you can see, the VM is running off of the storage on fmt-pve-01. We can do the same with our Ceph storage.

Example FreeNAS over Proxmox ZFS shared storage

One can download the FreeNAS installer to local storage. Here is a quick tip to. You can then simply run the FreeNAS installer and everything will work fine.
